Why This Matters

Video can no longer be trusted as proof.

In May 2025, Google released Veo 3. It’s a text-to-video engine that lets users create eight-second clips real enough to pass for truth.

The launch shattered belief. A milestone for AI video. A turning point for how we see the world. A century of visual certainty cracked in an instant.

The age of video proof is over. It died the day Veo 3 went live. From now on, every clip is suspect. In courtrooms and culture alike, video shifts from fact to probability.

The eight-second cap isn’t a safeguard. It’s a speed bump.

This changes everything. Acting. Advertising. Political persuasion. Surveillance, too. The global film-and-video-production market was valued at roughly $297 billion in 2024, and every dollar of it now carries an asterisk.

This essay begins with the specs. Then it follows the implications. And it ends with one hard question:

When proof is gone, what remains?

The Real That Isn’t

These aren’t people. They’re prompts.

The video above, “The Prompt Theory” by Putchuon (“Put You On”), is entirely AI-generated. One of dozens making the rounds this week, it showcases the devastating realism of Google’s new Veo 3.

The lighting is exact. The lips align. The eyes carry weight. You search for glitches, some small tell of fakery. You find none.

This wasn’t filmed. It was prompted into being. No source to trace. No actor to call. No tape to rewind.

These aren’t people. They’re computer-generated “actors” reciting lines someone typed into a keyboard, and they look good enough to fool even the most discerning eye.

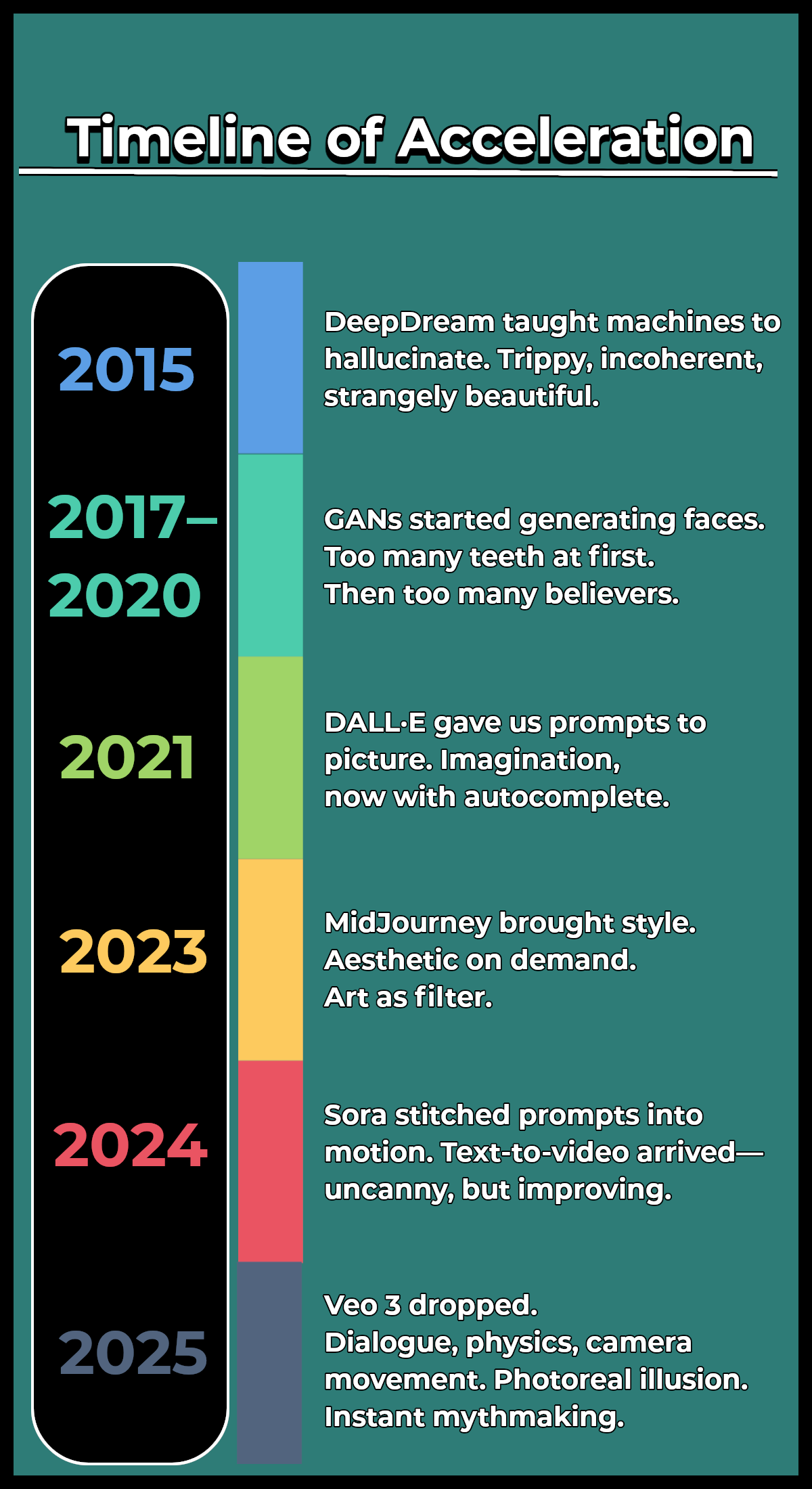

This didn’t come from nowhere. It came fast.

What Veo 3 Actually Does

This isn’t a toy. It’s alchemy by autocomplete.

Veo 3 is not a filter. It’s a fabrication engine.

You give it a prompt. It gives you cinema. Lighting with depth. Faces with emotion. Camera movement with purpose. If you’ve ever been on set, it feels familiar. If you haven’t, it still feels real.

Dialogue syncs. Soundscapes breathe. Rain sounds like rain. Footsteps echo with weight. Emotions flicker across faces with eerie accuracy. It isn’t perfect, but it’s persuasive.

Early reactions say it all. TechRadar’s Graham Barlow wrote, “These Veo 3 videos mean you can’t trust anything as being real ever again.” Tom May at Creative Bloq confessed, “I’ve spent the last few days watching AI-generated videos that would fool my own mother, and I’m genuinely terrified.”

It’s the start of cinema without cameras.

Why Only Eight Seconds

Eight seconds isn’t the ceiling of ambition. It’s the edge of architecture.

There’s a reason the clips are short.

Veo 3 caps each output at eight seconds. That limit sits plainly in the docs. Every clip arrives at 720p, twenty-four frames per second, and Google throttles both length and request rate.

Why? Partly technical. As time stretches, coherence slips. Faces blur, movements drift, the illusion frays.

Under the hood, Veo 3 almost certainly relies on diffusion models. They start with random noise and, step by step, carve it into moving images, having absorbed billions of examples of human motion, expression, and speech. The system doesn’t animate actors; it invents them, frame by frame and waveform by waveform, straight from text.
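To make "start with random noise and carve it step by step" concrete, here is a toy sketch of a deterministic, DDIM-style sampling loop. It is not Veo 3's pipeline, which Google has not published in this detail. The `oracle_denoiser` is a hypothetical stand-in that simply returns a known target; a real model would be a trained network guessing the clean signal from noise and a text prompt. The cosine schedule and the tiny three-value "image" are likewise assumptions chosen for illustration.

```python
import math
import random


def alpha_bar(t, steps):
    """Signal fraction at step t: 1.0 at t=0 (clean), about 0 at t=steps (noise)."""
    return math.cos(0.5 * math.pi * t / steps) ** 2


def oracle_denoiser(x_t, t, target):
    # Hypothetical stand-in for a trained network. A real denoiser would
    # predict the clean signal from x_t, t, and the prompt; this one cheats
    # and returns the answer so the loop itself is easy to follow.
    return list(target)


def sample(target, steps=50, seed=0):
    rng = random.Random(seed)
    # Step 0 of generation: pure Gaussian noise, no structure at all.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in range(steps, 0, -1):
        a_t = alpha_bar(t, steps)
        a_prev = alpha_bar(t - 1, steps)
        x0_hat = oracle_denoiser(x, t, target)
        # Work out which noise would explain the current sample...
        eps_hat = [
            (xi - math.sqrt(a_t) * x0i) / math.sqrt(1.0 - a_t)
            for xi, x0i in zip(x, x0_hat)
        ]
        # ...then re-mix prediction and noise at the next, cleaner level.
        x = [
            math.sqrt(a_prev) * x0i + math.sqrt(1.0 - a_prev) * ei
            for x0i, ei in zip(x0_hat, eps_hat)
        ]
    return x


if __name__ == "__main__":
    target = [0.2, -0.7, 1.0]  # stands in for the pixels of one tiny frame
    print(sample(target))
```

With a perfect denoiser the loop lands on the target exactly. Everything hard about a system like Veo 3 lives inside the part this sketch fakes: learning a denoiser good enough that the result looks filmed.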

The other part is economic. Photoreal synthesis at this fidelity is expensive. Google pays the GPU bill and keeps the faucet half-closed: ten API calls per minute, two clips per call.
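A quick back-of-the-envelope calculation shows how far that half-closed faucet still reaches. The sketch below uses only the figures quoted in this essay (ten calls per minute, two clips per call, eight seconds per clip, twenty-four frames per second); they are taken as assumptions, not checked against current quotas. It ignores render latency, failed generations, and the real difficulty of keeping continuity between clips.

```python
import math

# Figures as quoted in this essay; treat them as assumptions.
CALLS_PER_MINUTE = 10
CLIPS_PER_CALL = 2
SECONDS_PER_CLIP = 8
FRAMES_PER_SECOND = 24


def footage_seconds_per_minute():
    """Seconds of generated footage one account could request per minute."""
    return CALLS_PER_MINUTE * CLIPS_PER_CALL * SECONDS_PER_CLIP


def quota_minutes_for(runtime_seconds):
    """Minutes of request quota needed to cover a given runtime in clips."""
    clips = math.ceil(runtime_seconds / SECONDS_PER_CLIP)
    calls = math.ceil(clips / CLIPS_PER_CALL)
    return calls / CALLS_PER_MINUTE


if __name__ == "__main__":
    per_minute = footage_seconds_per_minute()
    print(per_minute)                      # 160 seconds of footage
    print(per_minute * FRAMES_PER_SECOND)  # 3840 frames
    print(quota_minutes_for(90 * 60))      # 33.8 minutes for a 90-minute runtime
```

On these numbers, the request quota for a feature-length runtime fits inside a lunch break. The cap slows a single clip, not a determined account.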

Even so, eight seconds can hold wonders. You can add ambient noise, dialogue, and sound effects, all generated on the fly. Physics holds, light breathes, the prompt sticks.

Eight seconds is not a ceiling. It is the limit of the architecture.

For now.

The Collapse of Trust

We’ve entered the era of infinite alibi.

Video used to mean something. It carried weight. Proof, not possibility.

Not anymore.

Veo 3 severed the link between what’s seen and what’s true. A clip, no matter how sharp, is just one version of events. Maybe it happened. Maybe it was typed into existence. Maybe both.

Courts hesitate. Journalists second-guess. Politicians lie louder, because now, every truth has a double.

We’ve entered the era of infinite alibi.

Crime got easier. So did blaming the wrong person. Surveillance lost its grip. Cameras no longer settle disputes. They multiply them. The same tools that once promised safety now offer plausible deniability. For everyone.

A gift to the guilty. A shield for the innocent. And a nightmare for anyone trying to tell them apart.

Solutions are beginning to emerge. Some platforms are experimenting with cryptographic watermarking, embedding invisible signals that prove a video’s origin.

Others are developing AI provenance frameworks, where every generated frame carries a tamper-proof signature.
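What a per-frame signature might look like can be sketched in a few lines. This is an illustration under loud assumptions, not how any named framework works: real provenance systems rely on public-key signatures and certificates, while this sketch substitutes a shared-secret HMAC from Python's standard library. The function names and the chaining scheme are hypothetical. Each tag covers the frame and the previous tag, so editing, dropping, or reordering frames breaks verification.

```python
import hashlib
import hmac


def sign_frames(frames, key):
    """Return one tag per frame; each tag also covers the previous tag."""
    tags = []
    prev = b"\x00" * 32  # fixed starting value for the chain
    for frame in frames:
        tag = hmac.new(key, prev + frame, hashlib.sha256).digest()
        tags.append(tag)
        prev = tag
    return tags


def verify_frames(frames, tags, key):
    """True only if every frame matches its tag, in the original order."""
    if len(frames) != len(tags):
        return False
    expected = sign_frames(frames, key)
    return all(hmac.compare_digest(e, t) for e, t in zip(expected, tags))


if __name__ == "__main__":
    key = b"demo-key-not-for-real-use"  # assumption: a secret held by the generator
    frames = [b"frame-0", b"frame-1", b"frame-2"]
    tags = sign_frames(frames, key)

    print(verify_frames(frames, tags, key))                         # True
    print(verify_frames([b"frame-0", b"EDITED", b"frame-2"], tags, key))  # False
    print(verify_frames(frames[::-1], tags, key))                   # False
```

Note what the sketch cannot do. It proves a clip is unchanged since signing; it says nothing about a clip that arrives with no tags at all, which is exactly the state of footage once a signature has been stripped.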

However, Google’s SynthID watermark, the C2PA manifests backed by Adobe, Microsoft, and others, and Meta’s own in-house marks all speak different dialects; a tool that can read one often goes blind on the others. Analysts note that this fragmentation forces users to juggle multiple detectors and “reduces accessibility,” leaving plenty of room for bad actors to slip content through the cracks.

Public awareness campaigns, too, aim to teach viewers how to spot synthetic media and verify source integrity.

But these tools are far from universal. Adoption remains voluntary. The tech outpaces the guardrails. And no watermark can force someone to care if the fiction feels better than the truth.

Every fix is temporary. Watermarks get stripped. Detection tools lag by generations. Laws chase the crime, always a step behind.

This isn’t a problem to be solved. It’s a condition to be managed, like spam flooding inboxes or pirated films outpacing takedowns. The forgeries will improve faster than the filters, and the only permanent truth is this: trust must now live outside the frame.

Truth is no longer something you show. It’s something you sell. And in a market where doubt is cheaper than data, the counterfeit will always travel faster than the truth.

Cultural Shockwaves

Cinema has become wish fulfillment.

The casting call is over.

AI doesn’t need headshots.

Extras, background actors, even leads, replaced by code that doesn’t eat, age, or ask for royalties. Commercial performers go first. They won’t be the last.

You don’t need actors. You don’t need gear. You don’t even need money.

With Veo 3, anyone can direct. Type a sentence, and the camera listens. Characters appear. Emotions unfold. The line between filmmaker and fantasist dissolves.

No permits. No crew. No waiting for golden hour. Just prompt and receive.

Cinema has become wish fulfillment.

And the studio? It lives in your browser.

On TikTok and YouTube, a new species is blooming: the AI Fluencer. Flawless skin. Perfect lighting. Daily uploads. No sleep. Their followers don’t care, or don’t know.

Hollywood didn’t open its gates. It was bypassed. The same tools now power indie auteurs and professional liars.

And personal cinema? It’s already here.

Grief clips. Erotic fantasies. Childhood memories reshot with better lighting. A mother’s voice rebuilt from voicemail fragments. A lost pet running through a sunset that never happened.

It’s beautiful.

It’s horrifying.

It’s happening.

Authorship, Consent, and the Ethics Vacuum

Who owns a face?

Who owns a voice conjured by prompt, stitched from data, styled to resemble, but never technically copy, a real person?

Laws exist, but they lag. The federal TAKE IT DOWN Act criminalizes non-consensual explicit deepfakes. Tennessee’s ELVIS Act protects musicians’ voices. California has passed laws targeting political deepfakes near elections. Yet these are patches on a widening breach.

In March 2022, a deepfake video of Ukrainian President Zelensky appeared online, falsely announcing a military surrender. It was crude, but effective enough to sow panic before it was debunked. The next one won’t be so easy to spot.

No comprehensive statute prevents your likeness from starring in films you never made. No universal court precedent shields your voice from uttering words you never spoke.

The tools are neutral. The uses are not.

Propaganda, revenge porn, fake endorsements, deep-nostalgia dreambait, all share the same scaffolding. All arrive with plausible deniability.

Some governments and companies have begun pushing for watermarking standards or AI provenance tracking, but adoption is scattered, enforcement is unclear, and detection tools are often one step behind.

But policy moves slowly, and technology moves fast.

And when the memory looks real enough, it can become one.

The Ambivalence

Freedom came quickly. Meaning didn’t.

This is what the dreamers wanted.

A child with stories in his head no longer needs a camera. Or a crew. Or permission. He needs only words. He can build worlds now, frame by frame, line by line.

The gatekeepers are gone. The wall is down.

But so is the floor.

The same power that frees the imagination corrodes consensus. When anything can be conjured, what can still be trusted? When footage feels sacred but proves nothing, what holds belief in place?

We are freer than ever.

And more adrift.

Hold both truths. Don’t look away.

Life in the Post-Proof World

What was once evidence is now output.

We used to say “roll the tape.”

Now we say “run the prompt.”

The lens recorded. The prompt conjures.

Video isn’t just capture anymore. It’s construction.

What used to be proof is now just product.

In this new world, trust floats. Untethered. Fragile.

Not assumed. Earned.

Not shown. Built.

Authority no longer lives in the image.

It has to come from somewhere else—

from transparency, from repetition, from integrity.

From people.

In May 2025, we lost video as proof.

We haven’t lost truth itself,

but we may need to relearn how to recognize it.

What comes next depends on what we demand, what we accept, and what we choose to believe.

So ask yourself: In the age of infinite fiction, who will you trust and why?